UUIDs, Universally Unique IDs, are handy 128-bit IDs. Their values are unique, universally, hence the name.

(If you work with Microsoft, you call them GUIDs. I do primarily think of them as GUIDs, but I’m going to stick with calling them UUIDs for this article, as I think that name is more common.)

That makes them useful as identifiers. Thanks to their universal uniqueness, you could have a distributed set of machines, each producing its own IDs without any co-ordination, even completely disconnected from each other, and never worry about any of those IDs colliding.

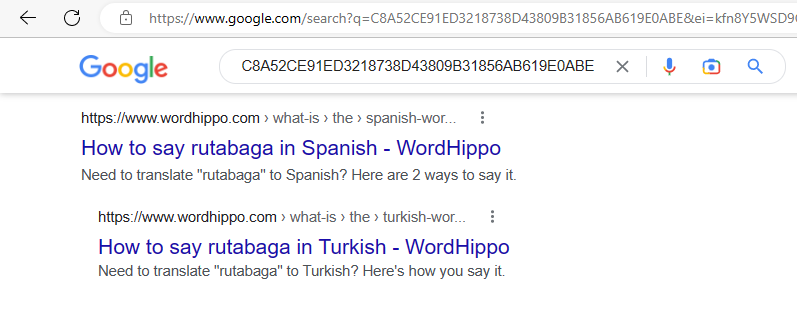

When you look at a UUID value, it will usually be expressed in hex and (because reasons) in hyphen-separated groups of 8-4-4-4-12 digits.

xxxxxxxx-xxxx-7xxx-xxxx-xxxxxxxxxxxx

You can tell which type of UUID it is by looking at the highlighted digit, the first digit of the middle four-digit block. That digit always tells you which type of UUID you’re looking at. This one is a type 7 because that hex digit is a 7. If it were a 4, it would be a type 4.
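If you’d rather not squint at hex, here is a minimal Python sketch of the same check. The helper name `uuid_type` is mine, invented for illustration. It assumes the UUID is written in the usual 8-4-4-4-12 form.

```python
import uuid

def uuid_type(text: str) -> int:
    """Return the type digit of a UUID written in 8-4-4-4-12 form."""
    # Index 14 is the first character of the third hyphen-separated group.
    return int(text[14], 16)

example = str(uuid.uuid4())  # the standard library hands out type 4s
print(example, "is type", uuid_type(example))

# A parsed UUID object exposes the same digit as its .version property.
print(uuid.UUID(example).version)
```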

As I write this, there are 8 types to choose from. But which type should you use? Type 7. Use type 7. If that’s all you came for, you can stop here. You ain’t going to need the others.

Type 7 – The one you actually want.

This type of UUID was designed for assigning IDs to records on database tables.

The main thing about type 7 is that the first block of bits is a timestamp. Since time always goes forward [citation needed] and the timestamp is right at the front, each UUID you generate will have a bigger value than the last one.

This is important for databases, as they are optimized for “ordered” IDs like this. To oversimplify it, each database table has an index tracking each record by its ID, allowing any particular record to be located quickly, much like flipping through a phone book until you get close to the entry you wanted. The simplest place to add a new ID is on the end, and you can only do that if your new ID comes after all the previous ones. Adding a new record anywhere else will require that index to be reorganised to make space for the new one in the middle.
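To make that a little more concrete, here is a toy sketch where a sorted Python list stands in for the index. Real database indexes are trees, not lists, so treat this purely as an illustration of “added on the end” versus “squeezed into the middle”. The function name and the key sets are made up for the example.

```python
import bisect
import random

def appended_at_end(keys):
    """Insert keys into a sorted list; count how many landed at the very end."""
    index = []  # stands in for the database index
    at_end = 0
    for key in keys:
        position = bisect.bisect_right(index, key)
        if position == len(index):
            at_end += 1
        index.insert(position, key)
    return at_end

rng = random.Random(0)
n = 1000
ordered_keys = list(range(n))                            # like time-ordered IDs
random_keys = [rng.getrandbits(128) for _ in range(n)]   # like fully random IDs

print("ordered:", appended_at_end(ordered_keys), "of", n, "went on the end")
print("random: ", appended_at_end(random_keys), "of", n, "went on the end")
```

Every ordered key is a simple append. Almost every random key has to be slotted in somewhere in the middle.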

(You often see UUIDs criticised for being random and unordered, but that’s type 4. Don’t use type 4.)

The timestamp is 48 bits long and counts the number of milliseconds since the start of 1970. A 48-bit millisecond counter lasts about 8,900 years, so we’re good until roughly the year 10,889. Other than the 6 bits which are always fixed, the remaining 74 bits are randomness, which is there so that all the UUIDs created in the same millisecond will be different. (Except it is a little more complicated than that. Read the RFC.)
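Here is a rough sketch of that layout, built by hand in Python. The function names are my own, and it deliberately skips the “little more complicated” parts of the RFC, such as keeping IDs ordered within a single millisecond. If your language or library ships a real type 7 generator, use that instead.

```python
import os
import time
import uuid
from typing import Optional

def make_uuid7(unix_ms: Optional[int] = None) -> uuid.UUID:
    """Build a type 7 UUID: 48-bit millisecond timestamp, then random bits."""
    if unix_ms is None:
        unix_ms = time.time_ns() // 1_000_000
    raw = bytearray(unix_ms.to_bytes(6, "big") + os.urandom(10))
    raw[6] = (raw[6] & 0x0F) | 0x70  # four fixed bits: the type digit, 7
    raw[8] = (raw[8] & 0x3F) | 0x80  # two fixed bits: the variant
    return uuid.UUID(bytes=bytes(raw))

def timestamp_ms(value: uuid.UUID) -> int:
    """Recover the millisecond timestamp from the top 48 bits."""
    return value.int >> 80

earlier = make_uuid7(1_700_000_000_000)
later = make_uuid7(1_700_000_000_001)
print(earlier)
print(later)
print("sorts in time order:", earlier < later)
```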

So there we are. Type 7 UUIDs rule, all other types drool. We done?

Migrating from auto-incrementing IDs.

Suppose you have an established table with a 32-bit auto-incrementing integer primary key. You want to migrate to type 7 UUIDs but you still need to keep the old IDs working. A user might come along with a legacy integer ID and you still want to allow that request to keep working as it did before.

You could create a batch of new type 7 UUIDs and build a new table that maps the legacy integer IDs to their new UUIDs. If that works for you, that’s great, but we can do without that table with a little bit of cleverness.

Let’s think about our requirements:

- We want to deterministically convert a legacy ID into its UUID.

- These UUIDs are in the same order as the original legacy IDs.

- New records’ UUIDs come after all the UUIDs for legacy records.

- We maintain the “universally unique”-ness of the IDs.

This is where we introduce type 8 UUIDs. The only rule of this type is that there are no rules. (Except they still have to be 128 bits and six of those bits must have fixed values. Okay, there are a few rules.) It is up to you how you construct this type of UUID.

Given our requirements, let’s sketch out how we want to lay out the bits of these IDs.

The type 7 UUIDs all start with a 01 byte, until 2039 when they will start 02. They won’t ever start with a 00 byte. So to ensure these IDs are always before any new IDs, we’ll make the first four hex digits all zeros. The legacy 32-bit integer ID can be the next four bytes.
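If you want to check those dates, the arithmetic is short. The first byte is the top 8 bits of the 48-bit timestamp, so it ticks over once every 2^40 milliseconds. A small sketch, with a helper name of my own choosing:

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def first_byte_starts(first_byte: int) -> datetime:
    """Moment at which a 48-bit millisecond timestamp begins with this byte."""
    # The first byte is the top 8 of 48 bits, so it changes every 2**40 ms.
    return EPOCH + timedelta(milliseconds=first_byte * 2**40)

for value in (0x01, 0x02):
    print(f"{value:02x} starts in", first_byte_starts(value).year)
```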

Because we want the UUIDs we create to be both deterministic and universally-unique, the remaining bits need to look random but not actually be random. Running a hash function over the ID and a fixed salt string will produce enough bits to fill in the remaining bits.

Now, to convert a legacy 32-bit ID into its equivalent UUID, we do the following:

- Start an array of bytes with two zero bytes.

- Append the four bytes of legacy ID, most significant byte first.

- Compute a SHA hash (SHA-256, say) of (“salt” + legacy ID) and append the first 10 bytes of the hash to the array.

- Overwrite the six fixed bits (in the hash area) to their required values.

- Put the 16 bytes you’ve collected into a UUID type.
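The steps above can be sketched in Python as follows. Several details here are my assumptions and not part of the recipe: the hash is SHA-256, the legacy ID is fed to the hash as its decimal text, and `SALT` is a placeholder value. Whatever you pick, it must never change once IDs have been handed out.

```python
import hashlib
import uuid

SALT = b"example-salt"  # hypothetical value; choose your own and keep it fixed

def legacy_id_to_uuid(legacy_id: int) -> uuid.UUID:
    """Map a 32-bit legacy ID onto a deterministic type 8 UUID."""
    if not 0 <= legacy_id < 2**32:
        raise ValueError("legacy ID must fit in 32 unsigned bits")
    raw = bytearray(2)                        # two zero bytes
    raw += legacy_id.to_bytes(4, "big")       # most significant byte first
    # Assumption: the ID is hashed as decimal ASCII text after the salt.
    digest = hashlib.sha256(SALT + str(legacy_id).encode("ascii")).digest()
    raw += digest[:10]                        # first 10 bytes of the hash
    raw[6] = (raw[6] & 0x0F) | 0x80           # four fixed bits: type 8
    raw[8] = (raw[8] & 0x3F) | 0x80           # two fixed bits: the variant
    return uuid.UUID(bytes=bytes(raw))

print(legacy_id_to_uuid(1))
print(legacy_id_to_uuid(2))
print(legacy_id_to_uuid(4_294_967_295))
```

Both fixed-bit positions fall inside the ten hash bytes, so the zero prefix and the legacy ID survive untouched.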

And there we have it. When a user arrives with a legacy ID, we can deterministically turn it into its UUID without needing a mapping table or conversion service. Because of the initial zero bytes, these UUIDs will always come before the new type 7 UUIDs. Because the legacy ID bytes come next, the new UUIDs will maintain the same order as the legacy IDs. Because 74 bits come from a hash function with a salt as part of its input, universal-uniqueness is maintained.

What’s that? You need deterministic UUIDs but it isn’t as simple as dropping the bytes into place?

Deterministic UUIDs – Types 3 and 5.

These two types of UUID are the official deterministic types. If you have (say) a URL and you want to produce a UUID that represents that URL, these UUID types will do it. As long as you’re consistent with capital letters and character encoding, the same URL will always produce the same UUID.

The down-side of these types is that the UUID values don’t even try to be ordered, which is why I wrote the discussion of type 8 first. If the ordering of IDs is important, such as using them as primary keys, maybe think about doing it a different way.

Generation of these UUIDs works by hashing together a “namespace” UUID and the string you want to convert into a UUID. The hash algorithm is MD5 for type 3 or SHA1 for type 5. (In the case of SHA1, everything after the first 128 bits of hash is discarded.)
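To show there is no magic involved, here is a hand-rolled type 5 in Python, compared against the standard library’s `uuid.uuid5`. The function name `manual_uuid5` and the example URL are mine. In real code you would just call the library.

```python
import hashlib
import uuid

def manual_uuid5(namespace: uuid.UUID, name: str) -> uuid.UUID:
    """Hash the namespace bytes plus the name, keep 128 bits, fix six of them."""
    digest = hashlib.sha1(namespace.bytes + name.encode("utf-8")).digest()
    raw = bytearray(digest[:16])      # SHA1 gives 160 bits; drop the last 32
    raw[6] = (raw[6] & 0x0F) | 0x50   # four fixed bits: type 5
    raw[8] = (raw[8] & 0x3F) | 0x80   # two fixed bits: the variant
    return uuid.UUID(bytes=bytes(raw))

url = "https://example.com/some/page"
print(manual_uuid5(uuid.NAMESPACE_URL, url))
print(uuid.uuid5(uuid.NAMESPACE_URL, url))
```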

To use these UUIDs, suppose a user makes a request with a string value. You can turn that string into a deterministic UUID by running it through the generator function. That function takes two parameters: a namespace UUID (which could be a standard namespace or one you’ve invented) and the string to convert. It runs the hash function over both inputs and returns the result as a UUID.
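In Python, that generator function is `uuid.uuid5` (or `uuid.uuid3` for the MD5 flavour). The sketch below wraps it with a deliberately blunt consistency rule, trimming whitespace and lower-casing the whole string. That rule is only for illustration, since paths in real URLs can be case-sensitive. The wrapper name `url_to_uuid` is hypothetical.

```python
import uuid

def url_to_uuid(url: str) -> uuid.UUID:
    """Turn a URL into a type 5 UUID after applying one fixed normalisation."""
    # Illustrative rule only: pick whatever rule suits your data, then stick to it.
    normalised = url.strip().lower()
    return uuid.uuid5(uuid.NAMESPACE_URL, normalised)

# Same UUID both times, because the input is normalised first.
print(url_to_uuid("https://example.com/"))
print(url_to_uuid("  HTTPS://Example.com/ "))

# Without normalisation, a change of capitals gives a completely different UUID.
print(uuid.uuid5(uuid.NAMESPACE_URL, "HTTPS://Example.com/"))
```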

These UUID types do the job they’re designed to do. Just as long as you’re okay with the values not being ordered.

Type 3 (MD5) or Type 5 (SHA1)?

There are pros and cons to each one.

MD5 is faster than SHA1. If you’re producing them in bulk, that may be a consideration.

MD5 is known to be vulnerable to collisions. Someone who gets to choose the inputs could construct two different URLs that hash to the same type 3 UUID. (Finding a second URL to match one you have already hashed is a much harder attack, and not a practical one today.) Is that a problem? If you’re the only one building these URLs that get hashed, then a hypothetical doer of evil isn’t going to get to have their bad URL injected in.

Remember, the point of a UUID is to be an ID, not something that security should be depending upon. Even the type 5 UUID throws away a big chunk of the bits produced, leaving only 122 bits behind.

If you want to hash something for security, use SHA256 or SHA3 and keep all the bits. Don’t use UUID as a convenient hashing function. That’s not what it’s for!

On balance, I would pick type 5. While type 3 is faster, the difference is trivial unless you’re producing IDs in bulk. You might think that MD5 collisions are impossible with the range of inputs you’re working with, but are you quite sure?

Type 4 – The elephant in the room

A type 4 UUID is one generated from 122 bits of cryptographic quality randomness. Almost all UUIDs you see out there will be of this type.

Don’t use these any more. Use type 7. If you’re the developer of a library that generates type 4 UUIDs, please switch it to generating type 7s instead.

Seriously, I looked for practical use cases for type 4 UUIDs. Everything I could come up with was either better served by type 7, or both types came out the same. I could not come up with a use case where type 4 was actually better. (Please leave a comment if you have one.)

Except I did think of a couple of use-cases, but even then, you still don’t want to use type 4 UUIDs.

Don’t use UUIDs as secure tokens.

You shouldn’t use UUIDs as security tokens. They are designed to be IDs. If you want a security token, you almost certainly have a library that will produce them for you. The library that produces type 4 UUIDs uses one internally.

When you generate a type 4 UUID, six bits of randomness are thrown away in order to make it a valid UUID. It takes up the space of a 128 bit token but only has 122 bits of randomness.

Also, you’re stuck with those 122 bits. If you want more, you’d have to start joining them together. And you should want more – 256 bits is a common standard length for a reason.
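As one example of “a library that will produce them for you”, Python ships a `secrets` module for exactly this job. A minimal sketch, using the 256-bit length mentioned above:

```python
import secrets

# 32 random bytes = 256 bits, drawn from the OS's secure random source.
raw_token = secrets.token_bytes(32)

# The same amount of randomness, encoded as URL-safe text for headers or links.
text_token = secrets.token_urlsafe(32)

print(len(raw_token) * 8, "bits of randomness")
print(text_token)
```

Every one of those bits is random, with none spent on marking the value as a valid UUID.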

But most of all, there’s a risk that whoever wrote the library that generates your UUIDs will read this article and push out a new version that generates type 7 UUIDs instead. Those do an even worse job of being security tokens.

I’m sure they’d mention it in that library’s release notes but are you going to remember this detail? You just want to update this one library because a dependency needs the new version. You tested the new version and it all works fine but suddenly your service is producing really insecure tokens.

Maybe the developers of UUID libraries wouldn’t do that, precisely because of the possibility of misuse, but that’s even more reason to not use UUIDs as security tokens. We’re holding back progress!

In Conclusion…

Use type 7 UUIDs.

Picture Credits.

📸 “Night Ranger…” by Doug Bowman. (Creative Commons)

📸 “Cat” by Adrian Scottow. (Creative Commons)

📸 “Cat-36” by Lynn Chan. (Creative Commons)

📸 “A random landscape on a random day” by Ivo Haerma. (Creative Commons)

📸 “Elena” by my anonymous wife. (With Permission)